Language model integration

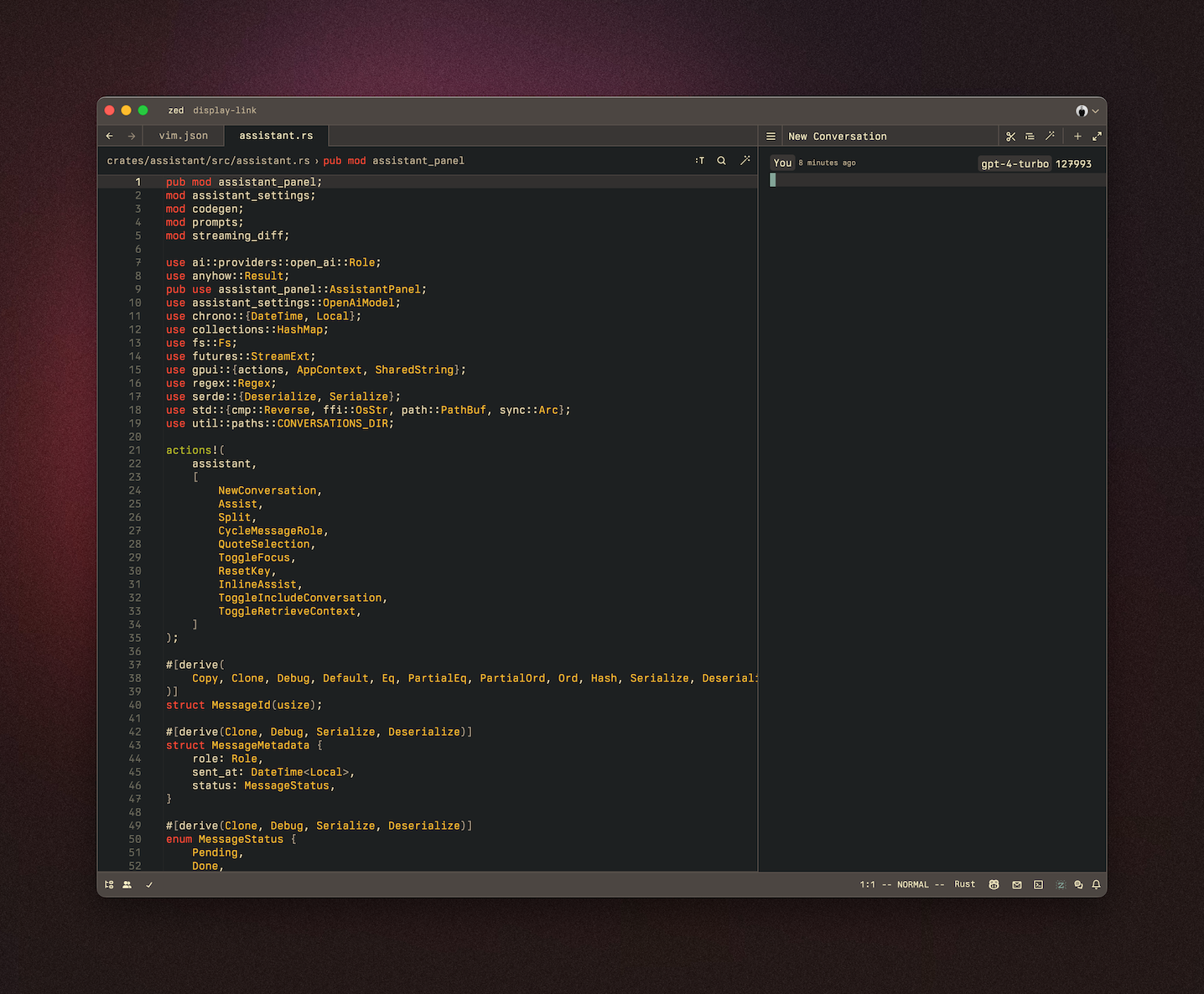

Assistant Panel

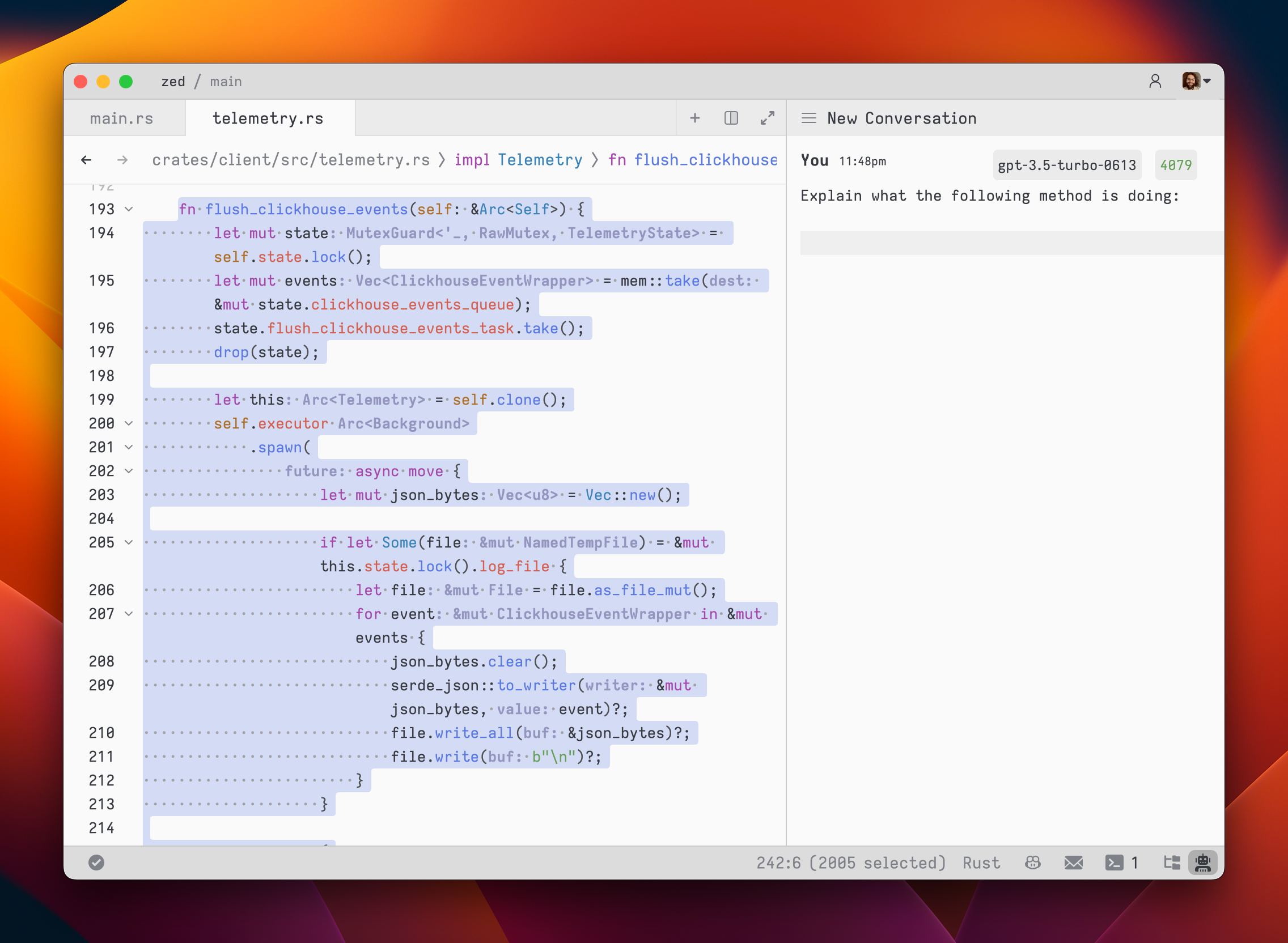

The assistant panel lets you interact with large language models. The assistant is useful for a variety of tasks, such as generating code, asking questions about existing code, and even writing plain text like emails and documentation. To open the assistant panel, toggle the right dock by running the workspace: toggle right dock action from the command palette, or by pressing cmd-r (Mac) or ctrl-alt-b (Linux).

Note: A custom key binding can be set to toggle the right dock.

Setup

- OpenAI API Setup Instructions

- OpenAI API Custom Endpoint

- Ollama Setup Instructions

- Anthropic API Setup Instructions

- Google Gemini API Setup Instructions

- GitHub Copilot Chat

Having a conversation

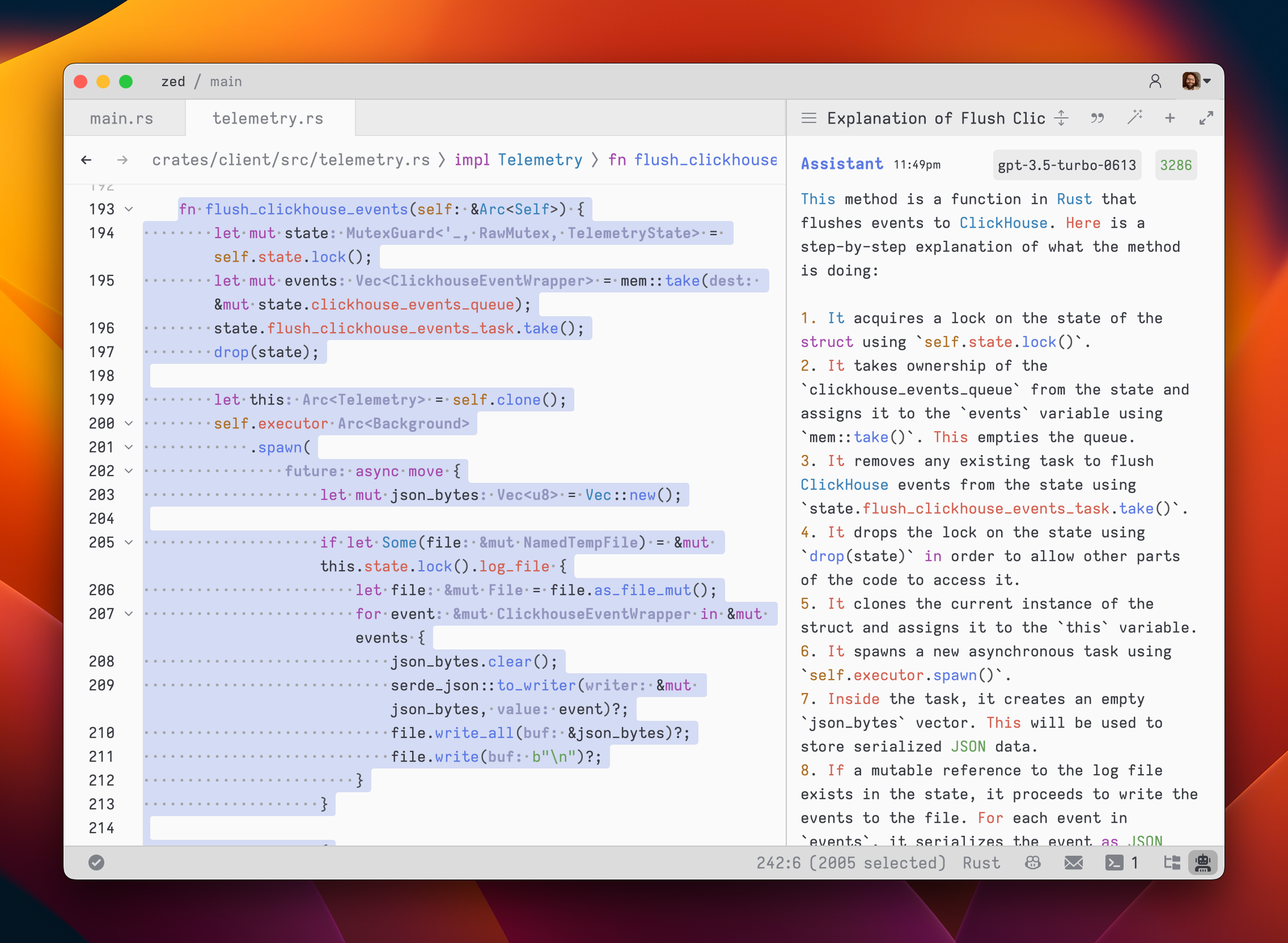

The assistant editor in Zed works like any other editor. You can use custom key bindings and multiple cursors, so you can move seamlessly between writing code and talking with the language models. What sets the assistant editor apart is its message blocks. Each block is a container for text that belongs to one role in the conversation. The roles are:

- You

- Assistant

- System

To begin, select a model and type a message in a You block.

As you type, the count of remaining tokens for the selected model updates.

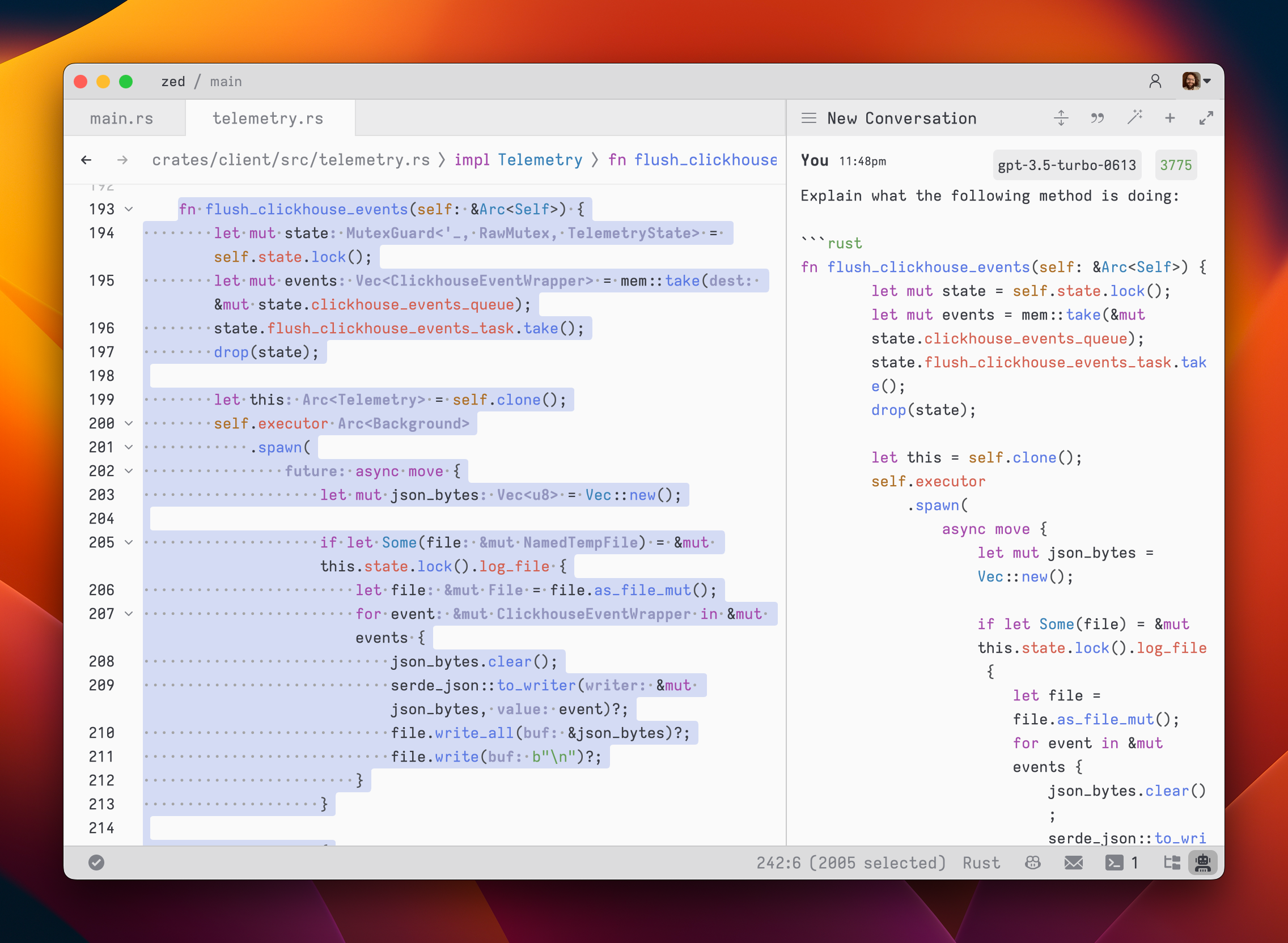

To insert text from an editor, highlight it and run cmd-> (assistant: quote selection). Zed wraps the text in a fenced code block if it is code.

To submit a message, use cmd-enter (assistant: assist). In typical chat applications, pressing enter submits the message. The assistant editor is instead designed to feel as close to a regular editor as possible, so pressing enter simply inserts a new line.

After submitting a message, the assistant's response will be streamed below, in an Assistant message block.

The stream can be canceled at any point with escape. This is useful if you realize early on that the response is not what you were looking for.

To start a new conversation at any time, press cmd-n or choose the New Context option from the hamburger menu at the top left of the panel.

Simple back-and-forth conversations work well with the assistant. However, there may come a time when you want to modify the previous text in the conversation and steer it in a different direction.

Editing a conversation

The assistant gives you full control over the conversation. You can freely edit any previous text, including the assistant's responses. To remove a message block entirely, place your cursor at the beginning of the block and press the delete key. A typical workflow might involve making edits throughout the conversation to refine your question or provide additional context. Here's an example:

- Write text in a You block.

- Submit the message with cmd-enter.

- Receive an Assistant response that doesn't meet your expectations.

- Cancel the response with escape.

- Erase the content of the Assistant message block and remove the block entirely.

- Add additional context to your original message.

- Submit the message with cmd-enter.

Being able to edit previous messages gives you control over how tokens are used. You don't need to start a new context to correct a mistake or add more context, and you don't have to waste tokens on follow-up corrections.

Some additional points to keep in mind:

- You are free to change the model type at any point in the conversation.

- You can cycle the role of a message block by clicking on the role. This is useful when you receive a response in an Assistant block that you want to edit and send back as a You block.

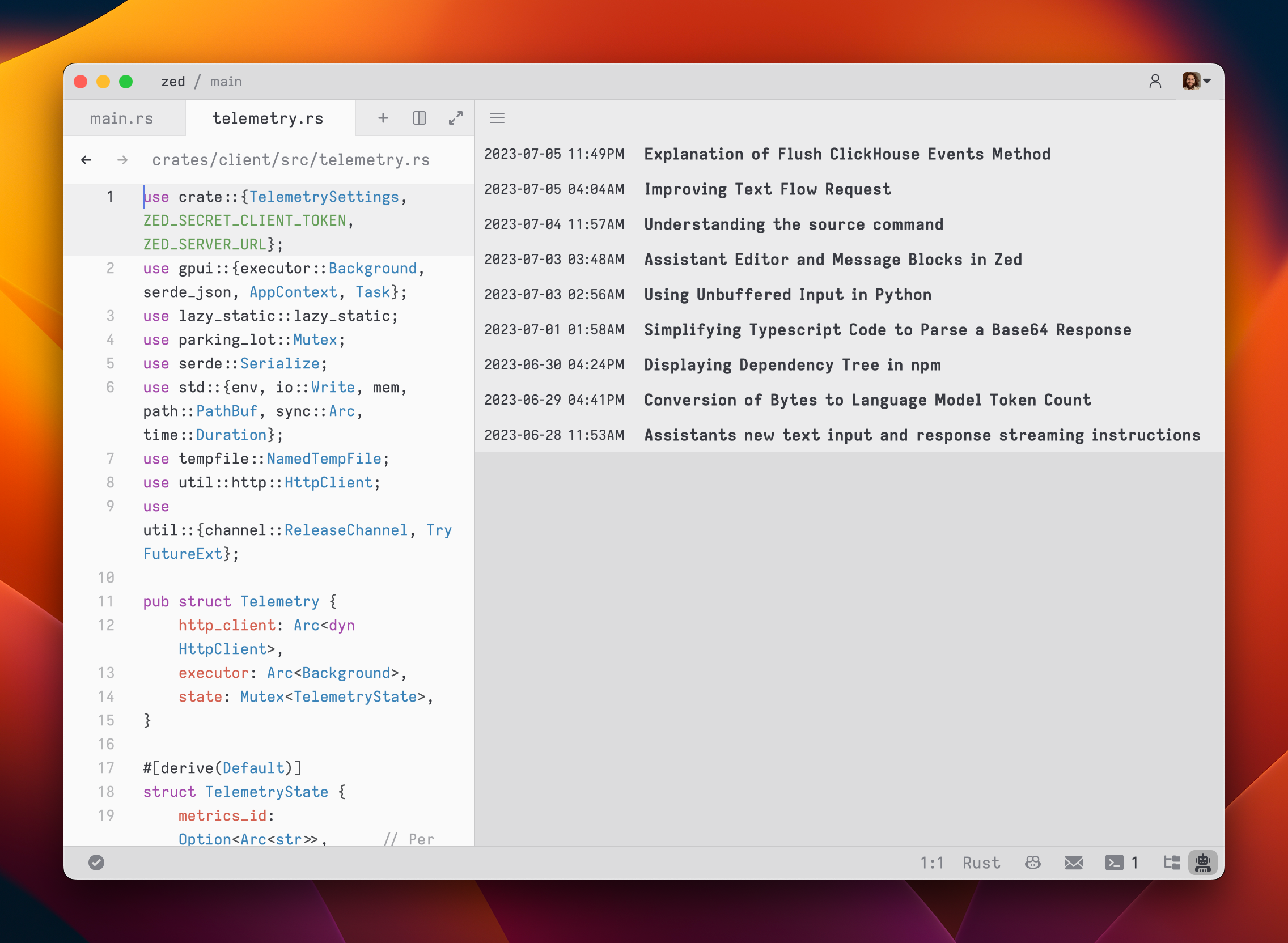

Saving and loading conversations

After you submit your first message, the language model generates a name for your conversation, and the conversation is automatically saved to ~/.config/zed/conversations on your file system. You can browse and load previous conversations by clicking the hamburger button in the top-left corner of the assistant panel.
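If you want to find saved conversations outside Zed, the minimal Python sketch below lists the files in the conversations directory, newest first. It assumes only that each conversation is stored as a separate file under ~/.config/zed/conversations. It does not assume anything about file names or the internal file format. The function name list_saved_conversations is hypothetical.

```python
from pathlib import Path


def list_saved_conversations(directory: Path) -> list[Path]:
    """Return the files in `directory`, most recently modified first.

    Returns an empty list if the directory does not exist yet
    (for example, before any conversation has been saved).
    """
    if not directory.is_dir():
        return []
    # Only regular files are considered; subdirectories are skipped.
    files = [p for p in directory.iterdir() if p.is_file()]
    # Sort by modification time so the latest conversation comes first.
    return sorted(files, key=lambda p: p.stat().st_mtime, reverse=True)


if __name__ == "__main__":
    # Location taken from the paragraph above.
    conversations_dir = Path.home() / ".config" / "zed" / "conversations"
    for path in list_saved_conversations(conversations_dir):
        print(path.name)
```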

Inline generation

You can generate and transform text in any editor by selecting text and pressing ctrl-enter.

You can also perform multiple generation requests in parallel by pressing ctrl-enter with multiple cursors, or by pressing ctrl-enter with a selection that spans multiple excerpts in a multibuffer.

To create a custom key binding that prefills a prompt, add an entry in the following format to your keymap:

[
  {
    "context": "Editor && mode == full",
    "bindings": {
      "ctrl-shift-enter": [
        "assistant::InlineAssist",
        { "prompt": "Build a snake game" }
      ]
    }
  }
]
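The keymap is plain JSON, so you can also generate several prompt-prefilling bindings at once. The Python sketch below builds an entry with the same shape as the keymap example above: a context string plus a bindings map from each keystroke to an ["assistant::InlineAssist", {"prompt": ...}] pair. It then prints the result as JSON. The helper name inline_assist_bindings, the ctrl-alt-enter keystroke, and the second prompt are hypothetical. Only the action name, the prompt argument, and the context string come from the example.

```python
import json


def inline_assist_bindings(prompts: dict[str, str]) -> list[dict]:
    """Build one keymap entry that maps each keystroke to a prefilled InlineAssist prompt."""
    bindings = {
        keystroke: ["assistant::InlineAssist", {"prompt": prompt}]
        for keystroke, prompt in prompts.items()
    }
    # Scope the bindings to full editors, using the context from the example above.
    return [{"context": "Editor && mode == full", "bindings": bindings}]


if __name__ == "__main__":
    keymap = inline_assist_bindings({
        "ctrl-shift-enter": "Build a snake game",
        "ctrl-alt-enter": "Add doc comments to this code",  # hypothetical second binding
    })
    print(json.dumps(keymap, indent=2))
```

If you use this approach, merge the printed array into your existing keymap rather than replacing it.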

Advanced: Overriding prompt templates

Zed allows you to override the default prompts used for various assistant features by placing custom Handlebars (.hbs) templates in your ~/.config/zed/prompts/templates directory. The following templates can be overridden:

- content_prompt.hbs: Used for generating content in the editor. Format:

    You are an AI programming assistant. Your task is to {{#if is_insert}}insert{{else}}rewrite{{/if}} {{content_type}}{{#if language_name}} in {{language_name}}{{/if}} based on the following context and user request.

    Context:
    {{#if is_truncated}}
    [Content truncated...]
    {{/if}}
    {{document_content}}
    {{#if is_truncated}}
    [Content truncated...]
    {{/if}}

    User request:
    {{user_prompt}}

    {{#if rewrite_section}}
    Please rewrite the section enclosed in <rewrite_this></rewrite_this> tags.
    {{else}}
    Please insert your response at the <insert_here></insert_here> tag.
    {{/if}}

    Provide only the {{content_type}} content in your response, without any additional explanation.

- terminal_assistant_prompt.hbs: Used for the terminal assistant feature. Format:

    You are an AI assistant for a terminal emulator. Provide helpful responses to user queries about terminal commands, file systems, and general computer usage.

    System information:
    - Operating System: {{os}}
    - Architecture: {{arch}}
    {{#if shell}}
    - Shell: {{shell}}
    {{/if}}
    {{#if working_directory}}
    - Current Working Directory: {{working_directory}}
    {{/if}}

    Latest terminal output:
    {{#each latest_output}}
    {{this}}
    {{/each}}

    User query: {{user_prompt}}

    Provide a clear and concise response to the user's query, considering the given system information and latest terminal output if relevant.

- edit_workflow.hbs: Used for generating the edit workflow prompt.

- step_resolution.hbs: Used for generating the step resolution prompt.

You can customize these templates to better suit your needs, as long as you keep the core structure and the variables Zed relies on. Zed automatically reloads your prompt overrides when they change on disk. See the assets/prompts directory in Zed's source for the current versions, which make a good starting point.
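To see how the variables in content_prompt.hbs choose between branches, here is a rough Python sketch that mimics two of the template's {{#if}} sections. It is only an illustration and not how Zed renders prompts, since Zed uses the Handlebars templates themselves. The function name describe_content_prompt is hypothetical. The sketch reproduces only the opening task sentence and the rewrite/insert instruction.

```python
from typing import Optional, Tuple


def describe_content_prompt(
    is_insert: bool,
    content_type: str,
    language_name: Optional[str],
    rewrite_section: bool,
) -> Tuple[str, str]:
    """Mimic two conditional parts of content_prompt.hbs (illustrative only)."""
    # {{#if is_insert}}insert{{else}}rewrite{{/if}} {{content_type}}
    # {{#if language_name}} in {{language_name}}{{/if}}
    verb = "insert" if is_insert else "rewrite"
    target = content_type + (f" in {language_name}" if language_name else "")
    task = f"Your task is to {verb} {target} based on the following context and user request."

    # {{#if rewrite_section}} ... {{else}} ... {{/if}}
    if rewrite_section:
        instruction = "Please rewrite the section enclosed in <rewrite_this></rewrite_this> tags."
    else:
        instruction = "Please insert your response at the <insert_here></insert_here> tag."
    return task, instruction
```

For example, describe_content_prompt(False, "code", "Rust", True) produces a task sentence asking to "rewrite code in Rust" together with the <rewrite_this> instruction. Dropping language_name removes the " in ..." clause entirely.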

Be sure you want to override these templates, because you will miss out on our ongoing improvements to the built-in prompts. Overrides are primarily intended for use when developing Zed.

Setup Instructions

OpenAI

- Create an OpenAI API key

- Make sure that your OpenAI account has credits

- Open the assistant panel, using either the assistant: toggle focus or the workspace: toggle right dock action in the command palette (cmd-shift-p).

- Make sure the assistant panel is focused:

The OpenAI API key will be saved in your keychain.

Zed will also use the OPENAI_API_KEY environment variable if it's defined.

OpenAI Custom Endpoint

You can use a custom API endpoint for OpenAI, as long as it's compatible with the OpenAI API structure.

To do so, add the following to your Zed settings.json:

{
  "language_models": {
    "openai": {
      "api_url": "http://localhost:11434/v1"
    }
  }
}

In this example, the custom URL is http://localhost:11434/v1.
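Before pointing Zed at a custom endpoint, you may want to check that it accepts OpenAI-style requests. The Python sketch below uses only the standard library to build a POST request to the chat/completions path under api_url. It sends the request only when run directly, which requires a running server. The helper names are hypothetical. The model name "mistral" is an assumption, so use whatever model your server exposes. No Authorization header is added. That works for local servers such as Ollama, but a hosted endpoint would also need your API key.

```python
import json
import urllib.request


def build_chat_request(api_url: str, model: str, message: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request against `api_url`."""
    url = api_url.rstrip("/") + "/chat/completions"
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": message}],
    }).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the content of the first choice."""
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("http://localhost:11434/v1", "mistral", "Say hello")
    try:
        print(send(req))
    except OSError as err:  # urllib's URLError and HTTPError are OSError subclasses
        print(f"Endpoint not reachable: {err}")
```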

Ollama

Download and install Ollama from ollama.com/download (Linux or macOS) and make sure it's running with ollama --version.

You can use Ollama with the Zed assistant by making Ollama appear as an OpenAI-compatible endpoint.

- Download a model with Ollama, for example mistral:

    ollama pull mistral

- Make sure that the Ollama server is running. You can start it either by running the Ollama app or by launching:

    ollama serve

- In the assistant panel, select one of the Ollama models using the model dropdown.

- (Optional) To change the default URL used to access the Ollama server, add the following settings:

    {
      "language_models": {
        "ollama": {
          "api_url": "http://localhost:11434"
        }
      }
    }
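If no Ollama models appear in the dropdown, it can help to confirm which models the server has pulled. Ollama's native API lists local models at /api/tags under the base URL configured above. The Python sketch below queries that path and extracts the model names. The helper names are hypothetical. The parsing assumes only that the response contains a "models" array whose entries have a "name" field.

```python
import json
import urllib.request


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from an Ollama /api/tags response body."""
    return [entry["name"] for entry in tags_response.get("models", [])]


def list_local_models(api_url: str = "http://localhost:11434") -> list[str]:
    """Ask a running Ollama server which models it has available locally."""
    url = api_url.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=5) as response:
        return model_names(json.load(response))


if __name__ == "__main__":
    try:
        print(list_local_models())
    except OSError as err:  # URLError is a subclass of OSError
        print(f"Could not reach Ollama: {err}")
```

After ollama pull mistral, the list would typically include a tagged name such as mistral:latest.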

Anthropic

You can use Claude 3.5 Sonnet with the Zed assistant by choosing it via the model dropdown in the assistant panel.

You can obtain an API key here.

Even if you pay for Claude Pro, you will still have to pay for additional credits to use it via the API.

Google Gemini

You can use Gemini 1.5 Pro/Flash with the Zed assistant by choosing it via the model dropdown in the assistant panel.

You can obtain an API key here.

GitHub Copilot

You can use GitHub Copilot Chat with the Zed assistant by choosing it via the model dropdown in the assistant panel.