docs/src/assistant.md

@@ -1 +0,0 @@

-# Assistant

Bennet Bo Fenner and Piotr created

- Fix links on assistant page to subpages

- Mention the configuration view in `configuration.md` and document more settings

Release Notes:

- N/A

---------

Co-authored-by: Piotr <piotr@zed.dev>

docs/src/assistant.md | 1

docs/src/assistant/assistant.md | 12

docs/src/assistant/commands.md | 20 --

docs/src/assistant/configuration.md | 227 +++++++++++++++++++++---------

4 files changed, 169 insertions(+), 91 deletions(-)

docs/src/assistant/assistant.md

@@ -4,14 +4,14 @@ The Assistant is a powerful tool that integrates large language models into your

This section covers various aspects of the Assistant:

-- [Assistant Panel](/assistant/assistant-panel.md): Create and collaboratively edit new contexts, and manage interactions with language models.

+- [Assistant Panel](./assistant-panel.md): Create and collaboratively edit new contexts, and manage interactions with language models.

-- [Inline Assistant](/assistant/inline-assistant.md): Discover how to use the Assistant to power inline transformations directly within your code editor and terminal.

+- [Inline Assistant](./inline-assistant.md): Discover how to use the Assistant to power inline transformations directly within your code editor and terminal.

-- [Providers & Configuration](/assistant/configuration.md): Configure the Assistant, and set up different language model providers like Anthropic, OpenAI, Ollama, Google Gemini, and GitHub Copilot Chat.

+- [Providers & Configuration](./configuration.md): Configure the Assistant, and set up different language model providers like Anthropic, OpenAI, Ollama, Google Gemini, and GitHub Copilot Chat.

-- [Introducing Contexts](/assistant/contexts.md): Learn about contexts (similar to conversations), and learn how they power your interactions between you, your project, and the assistant/model.

+- [Introducing Contexts](./contexts.md): Learn about contexts (similar to conversations), and learn how they power your interactions between you, your project, and the assistant/model.

-- [Using Commands](/assistant/commands.md): Explore slash commands that enhance the Assistant's capabilities and future extensibility.

+- [Using Commands](./commands.md): Explore slash commands that enhance the Assistant's capabilities and future extensibility.

-- [Prompting & Prompt Library](/assistant/prompting.md): Learn how to write and save prompts, how to use the Prompt Library, and how to edit prompt templates.

+- [Prompting & Prompt Library](./prompting.md): Learn how to write and save prompts, how to use the Prompt Library, and how to edit prompt templates.

docs/src/assistant/commands.md

@@ -66,15 +66,6 @@ Usage: `/prompt <prompt_name>`

Related: `/default`

-## `/search` (Not generally available)

-

-The `/search` command performs a semantic search for content in your project based on natural language queries. This allows you to find relevant code or documentation within your project.

-

-Usage: `/search <query> [--n <limit>]`

-

-- `query`: The natural language query to search for.

-- `--n <limit>`: Optional flag to limit the number of results returned.

-

## `/symbols`

The `/symbols` command inserts the active symbols (functions, classes, etc.) from the current tab into the context. This is useful for getting an overview of the structure of the current file.

@@ -100,11 +91,11 @@ Examples:

The `/terminal` command inserts a select number of lines of output from the terminal into the context. This is useful for referencing recent command outputs or logs.

-Usage: `/terminal [--line-count <number>]`

+Usage: `/terminal [<number>]`

-- `--line-count <number>`: Optional flag to specify the number of lines to insert (default is a predefined number).

+- `<number>`: Optional parameter to specify the number of lines to insert (default is 50).

-## `/workflow` (Not generally available)

+## `/workflow`

The `/workflow` command inserts a prompt that opts into the edit workflow. This sets up the context for the assistant to suggest edits to your code.

@@ -112,6 +103,5 @@ Usage: `/workflow`

## Extensibility

-The Zed team plans for assistant commands to be extensible, but this isn't quite ready yet. Stay tuned!

-

-Zed is open source, and all the slash commands are defined in the [assistant crate](https://github.com/zed-industries/zed/tree/main/crates/assistant/src/slash_command). If you are interested in creating your own slash commands a good place to start is by learning from the existing commands.

+Zed's extension API lets extensions expose custom slash commands, so you can create your own.

+To learn how to define them, see the [slash commands extension guide](../extensions/slash-commands.md).

docs/src/assistant/configuration.md

@@ -1,68 +1,25 @@

# Configuring the Assistant

-## Settings

-

-| key | type | default | description |

-| ------------- | ------ | ------- | ----------------------------- |

-| version | string | "2" | The version of the assistant. |

-| default_model | object | {} | The default model to use. |

-

-### Configuring the default model

-

-The `default_model` object can contain the following keys:

-

-```json

-// settings.json

-{

- "assistant": {

- "default_model": {

- "provider": "zed.dev",

- "model": "claude-3-5-sonnet"

- }

- }

-}

-```

-

-## Common Panel Settings

-

-| key | type | default | description |

-| -------------- | ------- | ------- | ------------------------------------------------------------------------------------- |

-| enabled | boolean | true | Disabling this will completely disable the assistant |

-| button | boolean | true | Show the assistant icon |

-| dock | string | "right" | The default dock position for the assistant panel. Can be ["left", "right", "bottom"] |

-| default_height | string | null | The pixel height of the assistant panel when docked to the bottom |

-| default_width | string | null | The pixel width of the assistant panel when docked to the left or right |

-

-## Example Configuration

-

-```json

-// settings.json

-{

- "assistant": {

- "default_model": {

- "provider": "zed.dev",

- "model": "claude-3-5-sonnet-20240620"

- },

- "version": "2",

- "button": true,

- "default_width": 480,

- "dock": "right",

- "enabled": true

- }

-}

-```

-

## Providers {#providers}

The following providers are supported:

-- Zed AI (Configured by default when signed in)

+- [Zed AI](#zed-ai) (configured by default when signed in)

- [Anthropic](#anthropic)

-- [GitHub Copilot Chat](#github-copilot-chat)

-- [Google Gemini](#google-gemini)

+- [GitHub Copilot Chat](#github-copilot-chat) [^1]

+- [Google Gemini](#google-gemini) [^1]

- [Ollama](#ollama)

- [OpenAI](#openai)

-- [OpenAI Custom Endpoint](#openai-custom-endpoint)

+

+To configure different providers, run `assistant: show configuration` in the command palette, or click on the hamburger menu at the top-right of the assistant panel and select "Configure".

+

+[^1]: This provider does not support the [`/workflow`](./commands.md#workflow) command.

+

+To further customize providers, edit your `settings.json`:

+

+- [Configuring endpoints](#custom-endpoint)

+- [Configuring timeouts](#provider-timeout)

+- [Configuring default model](#default-model)

### Zed AI {#zed-ai}

@@ -72,10 +29,42 @@ A hosted service providing convenient and performant support for AI-enabled codi

You can use Claude 3.5 Sonnet via [Zed AI](#zed-ai) for free. To use other Anthropic models you will need to configure it by providing your own API key.

-You can obtain an API key [here](https://console.anthropic.com/settings/keys).

+1. Obtain an API key [here](https://console.anthropic.com/settings/keys).

+2. Make sure that your Anthropic account has credits

+3. Open the configuration view (`assistant: show configuration`) and navigate to the Anthropic section

+4. Enter your Anthropic API key

Even if you pay for Claude Pro, you will still have to [pay for additional credits](https://console.anthropic.com/settings/plans) to use it via the API.

+#### Anthropic Custom Models {#anthropic-custom-models}

+

+You can add custom models to the Anthropic provider by adding the following to your Zed `settings.json`:

+

+```json

+{

+ "language_models": {

+ "anthropic": {

+ "available_models": [

+ {

+ "name": "some-model",

+ "display_name": "some-model",

+ "max_tokens": 128000,

+ "max_output_tokens": 2560,

+ "cache_configuration": {

+ "max_cache_anchors": 10,

+ "min_total_token": 10000,

+ "should_speculate": false

+ },

+ "tool_override": "some-model-that-supports-toolcalling"

+ }

+ ]

+ }

+ }

+}

+```

+

+Custom models will be listed in the model dropdown in the assistant panel.

+

### GitHub Copilot Chat {#github-copilot-chat}

You can use GitHub Copilot chat with the Zed assistant by choosing it via the model dropdown in the assistant panel.

@@ -86,6 +75,27 @@ You can use Gemini 1.5 Pro/Flash with the Zed assistant by choosing it via the m

You can obtain an API key [here](https://aistudio.google.com/app/apikey).

+#### Google Gemini Custom Models {#google-custom-models}

+

+You can add custom models to the Google provider by adding the following to your Zed `settings.json`:

+

+```json

+{

+ "language_models": {

+ "google": {

+ "available_models": [

+ {

+ "name": "custom-model",

+ "max_tokens": 128000

+ }

+ ]

+ }

+ }

+}

+```

+

+Custom models will be listed in the model dropdown in the assistant panel.

+

### Ollama {#ollama}

Download and install Ollama from [ollama.com/download](https://ollama.com/download) (Linux or macOS) and ensure it's running with `ollama --version`.

@@ -119,35 +129,114 @@ You can use Ollama with the Zed assistant by making Ollama appear as an OpenAPI

### OpenAI {#openai}

-<!--

-TBD: OpenAI Setup flow: Review/Correct/Simplify

--->

-

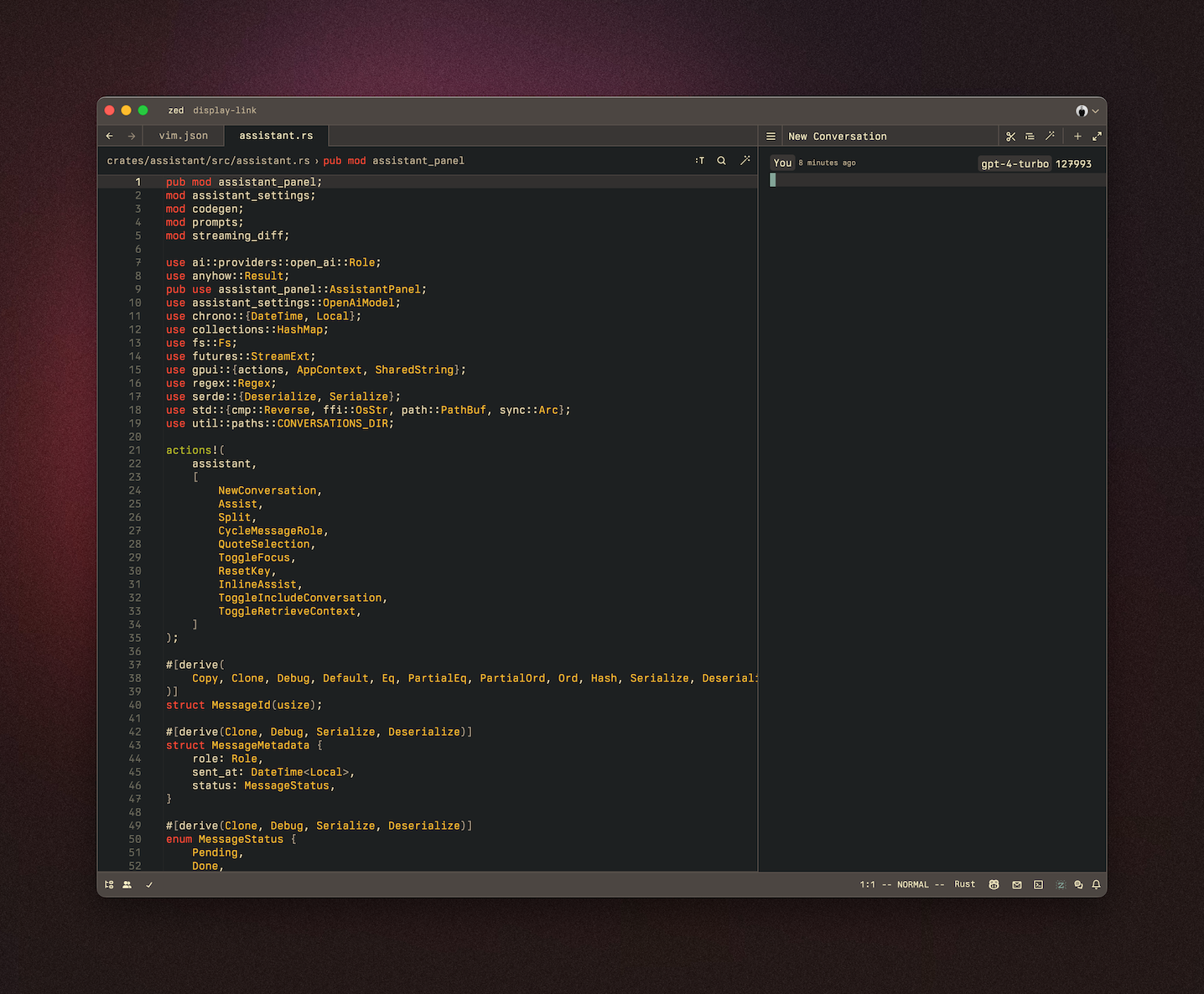

1. Create an [OpenAI API key](https://platform.openai.com/account/api-keys)

2. Make sure that your OpenAI account has credits

-3. Open the assistant panel, using either the `assistant: toggle focus` or the `workspace: toggle right dock` action in the command palette (`cmd-shift-p`).

-4. Make sure the assistant panel is focused:

-

-

+3. Open the configuration view (`assistant: show configuration`) and navigate to the OpenAI section

+4. Enter your OpenAI API key

The OpenAI API key will be saved in your keychain.

Zed will also use the `OPENAI_API_KEY` environment variable if it's defined.

-#### OpenAI Custom Endpoint {#openai-custom-endpoint}

+#### OpenAI Custom Models {#openai-custom-models}

+

+You can add custom models to the OpenAI provider by adding the following to your Zed `settings.json`:

+

+```json

+{

+ "language_models": {

+ "openai": {

+ "version": "1",

+ "available_models": [

+ {

+ "name": "custom-model",

+ "max_tokens": 128000

+ }

+ ]

+ }

+ }

+}

+```

+

+Custom models will be listed in the model dropdown in the assistant panel.

+

+### Advanced configuration {#advanced-configuration}

+

+#### Example Configuration

+

+```json

+{

+ "assistant": {

+ "enabled": true,

+ "default_model": {

+ "provider": "zed.dev",

+ "model": "claude-3-5-sonnet"

+ },

+ "version": "2",

+ "button": true,

+ "default_width": 480,

+ "dock": "right"

+ }

+}

+```

+

+#### Custom endpoints {#custom-endpoint}

-You can use a custom API endpoint for OpenAI, as long as it's compatible with the OpenAI API structure.

+You can use a custom API endpoint for different providers, as long as it's compatible with that provider's API structure.

To do so, add the following to your Zed `settings.json`:

```json

{

"language_models": {

- "openai": {

+ "some-provider": {

"api_url": "http://localhost:11434/v1"

}

}

}

```

-The custom URL here is `http://localhost:11434/v1`.

+Here, `some-provider` can be any of the following values: `anthropic`, `google`, `ollama`, `openai`.

+

+#### Custom timeout {#provider-timeout}

+

+You can customize the timeout used for LLM requests by adding the following to your Zed `settings.json`:

+

+```json

+{

+ "language_models": {

+ "some-provider": {

+ "low_speed_timeout_in_seconds": 10

+ }

+ }

+}

+```

+

+Here, `some-provider` can be any of the following values: `anthropic`, `copilot_chat`, `google`, `ollama`, `openai`.

+

+#### Configuring the default model {#default-model}

+

+The default model can be changed by clicking on the model dropdown (top-right) in the assistant panel.

+Picking a model saves it as the default model. You can also set the default model manually by editing the `default_model` object in your settings, which takes a `provider` and a `model` key:

+

+```json

+{

+ "assistant": {

+ "version": "2",

+ "default_model": {

+ "provider": "zed.dev",

+ "model": "claude-3-5-sonnet"

+ }

+ }

+}

+```

+

+#### Common Panel Settings

+

+| key | type | default | description |

+| -------------- | ------- | ------- | ------------------------------------------------------------------------------------- |

+| enabled | boolean | true | Disabling this will completely disable the assistant |

+| button | boolean | true | Show the assistant icon |

+| dock | string | "right" | The default dock position for the assistant panel. Can be ["left", "right", "bottom"] |

+| default_height | number | null | The pixel height of the assistant panel when docked to the bottom |

+| default_width | number | null | The pixel width of the assistant panel when docked to the left or right |
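To show how the settings documented in `configuration.md` fit together, here is a sketch of a single `settings.json` that docks the panel at the bottom, keeps the default model, and points the `openai` provider at a local OpenAI-compatible endpoint with a longer request timeout. It assumes these keys can all live in one file as the page describes; the panel height (`320`), the timeout (`30`), and the reuse of the `http://localhost:11434/v1` URL from the endpoint example are illustrative values, not recommendations.

```json
// settings.json (illustrative sketch; all values are examples)
{
  "assistant": {
    "version": "2",
    "enabled": true,
    // Dock the panel at the bottom; default_height only applies in this position
    "dock": "bottom",
    "default_height": 320,
    // Same shape as the default model example in the configuration page
    "default_model": {
      "provider": "zed.dev",
      "model": "claude-3-5-sonnet"
    }
  },
  "language_models": {
    "openai": {
      // Any endpoint compatible with the OpenAI API structure
      "api_url": "http://localhost:11434/v1",
      "low_speed_timeout_in_seconds": 30
    }
  }
}
```

Grouping the endpoint and timeout under the same provider key mirrors how both settings are scoped per provider in `language_models`.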