# Configuring the Assistant

## Settings

| key           | type   | default | description                   |
| ------------- | ------ | ------- | ----------------------------- |
| version       | string | "2"     | The version of the assistant. |
| default_model | object | {}      | The default model to use.     |

### Configuring the default model

The `default_model` object takes a `provider` key and a `model` key, as in this example:

```json
// settings.json
{
  "assistant": {
    "default_model": {
      "provider": "zed.dev",
      "model": "claude-3-5-sonnet"
    }
  }
}
```

## Common Panel Settings

| key            | type    | default | description                                                                                  |
| -------------- | ------- | ------- | -------------------------------------------------------------------------------------------- |
| enabled        | boolean | true    | Whether the assistant is enabled. Setting this to `false` disables the assistant completely. |
| button         | boolean | true    | Show the assistant icon.                                                                     |
| dock           | string  | "right" | The default dock position for the assistant panel: `"left"`, `"right"`, or `"bottom"`.       |
| default_height | number  | null    | The pixel height of the assistant panel when docked to the bottom.                           |
| default_width  | number  | null    | The pixel width of the assistant panel when docked to the left or right.                     |

## Example Configuration

```json
// settings.json
{
  "assistant": {
    "default_model": {
      "provider": "zed.dev",
      "model": "claude-3-5-sonnet-20240620"
    },
    "version": "2",
    "button": true,
    "default_width": 480,
    "dock": "right",
    "enabled": true
  }
}
```
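
As a variation on the configuration above, the following sketch docks the panel at the bottom and sets its height, using only keys from the Common Panel Settings table. The value `320` is an arbitrary illustration, and writing it as a number is an assumption that mirrors how `default_width` is written above.

```json
// settings.json
{
  "assistant": {
    "version": "2",
    "dock": "bottom",
    // Assumed value: height in pixels, applied only while docked to the bottom
    "default_height": 320
  }
}
```

Keys left out here, such as `enabled` and `button`, should keep their defaults from the table.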

## Providers {#providers}

The following providers are supported:

- [Zed AI](#zed-ai) (configured by default when signed in)
- [Anthropic](#anthropic)
- [GitHub Copilot Chat](#github-copilot-chat)
- [Google Gemini](#google-gemini)
- [Ollama](#ollama)
- [OpenAI](#openai)
- [OpenAI Custom Endpoint](#openai-custom-endpoint)

### Zed AI {#zed-ai}

A hosted service providing convenient and performant support for AI-enabled coding in Zed, powered by Anthropic's Claude 3.5 Sonnet and accessible just by signing in.

### Anthropic {#anthropic}

You can use Claude 3.5 Sonnet via [Zed AI](#zed-ai) for free. To use other Anthropic models, you need to provide your own API key.

You can obtain an API key from the [Anthropic console](https://console.anthropic.com/settings/keys).

Even if you pay for Claude Pro, you will still have to [pay for additional credits](https://console.anthropic.com/settings/plans) to use Claude via the API.

### GitHub Copilot Chat {#github-copilot-chat}

You can use GitHub Copilot Chat with the Zed assistant by choosing it in the model dropdown in the assistant panel.

### Google Gemini {#google-gemini}

You can use Gemini 1.5 Pro or Gemini 1.5 Flash with the Zed assistant by choosing one of them in the model dropdown in the assistant panel.

You can obtain an API key from [Google AI Studio](https://aistudio.google.com/app/apikey).

### Ollama {#ollama}

Download and install Ollama from [ollama.com/download](https://ollama.com/download) (Linux or macOS) and verify the installation with `ollama --version`.

You can use Ollama with the Zed assistant by making Ollama appear as an OpenAI-compatible endpoint.

1. Download, for example, the `mistral` model with Ollama:

   ```sh
   ollama pull mistral
   ```

2. Make sure that the Ollama server is running. You can start it either by running the Ollama app or by launching:

   ```sh
   ollama serve
   ```

3. In the assistant panel, select one of the Ollama models using the model dropdown.
4. (Optional) To change the default URL used to access the Ollama server, add the following settings:

```json
{
  "language_models": {
    "ollama": {
      "api_url": "http://localhost:11434"
    }
  }
}
```
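
To make an Ollama model the default instead of picking it from the dropdown each time, the `default_model` setting described earlier can presumably point at it. This is a sketch under two assumptions that this page does not confirm: that the provider identifier is `ollama` (matching the `language_models` key above), and that the model name must match what `ollama list` reports, which may include a tag such as `mistral:latest`.

```json
// settings.json
{
  "assistant": {
    "version": "2",
    "default_model": {
      // Assumed provider identifier, mirroring the "ollama" key under "language_models"
      "provider": "ollama",
      // Assumed model name; use the exact name shown by `ollama list`
      "model": "mistral:latest"
    }
  }
}
```

If the model does not show up in the assistant panel, selecting it once through the dropdown (step 3 above) is the path this page documents.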

### OpenAI {#openai}

<!--
TBD: OpenAI Setup flow: Review/Correct/Simplify
-->

1261. Create an [OpenAI API key](https://platform.openai.com/account/api-keys)

1272. Make sure that your OpenAI account has credits

1283. Open the assistant panel, using either the `assistant: toggle focus` or the `workspace: toggle right dock` action in the command palette (`cmd-shift-p`).

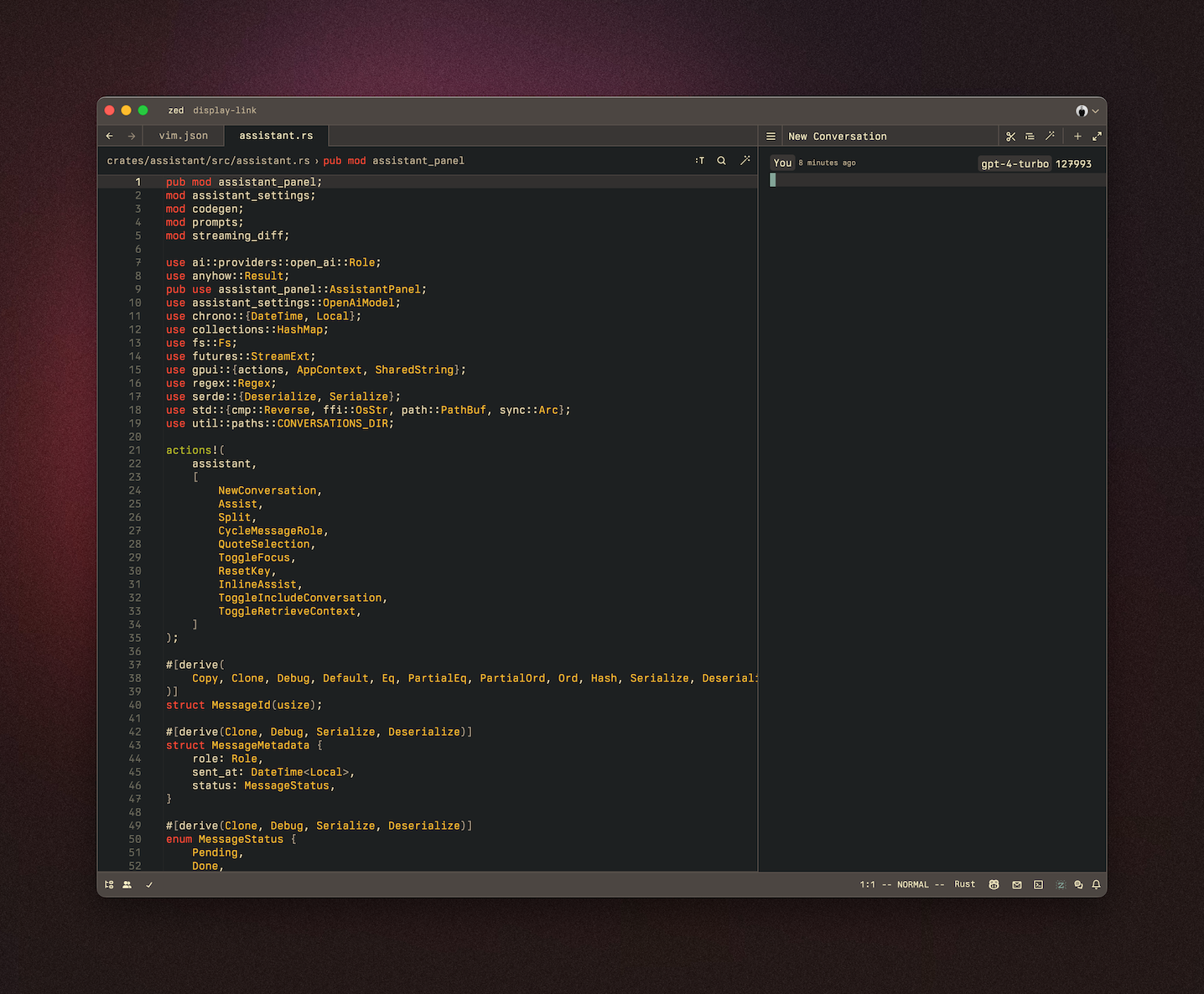

1294. Make sure the assistant panel is focused:

130

131

132

The OpenAI API key will be saved in your keychain.

Zed will also use the `OPENAI_API_KEY` environment variable if it's defined.

#### OpenAI Custom Endpoint {#openai-custom-endpoint}

You can use a custom API endpoint for OpenAI, as long as it's compatible with the OpenAI API structure.

To do so, add the following to your Zed `settings.json`:

```json
{
  "language_models": {
    "openai": {
      "api_url": "http://localhost:11434/v1"
    }
  }
}
```

In this example, the custom URL is `http://localhost:11434/v1`, which is the OpenAI-compatible `/v1` path of a local Ollama server on its default port.